The “One-Click” AI Video Factory: How Creators Are Scaling YouTube in 2026 Using Mostly Free Tools

Stop thinking you’re losing to “more talented” creators.

In 2026, YouTube rewards a different advantage: leverage.

The channels growing the fastest aren’t necessarily staffed by pro animators or editors. They’re the ones who’ve built a simple system that turns a single idea into a repeatable pipeline—script → scenes → visuals → motion → voice → edit—without spending thousands on software or freelancers.

This workflow is exploding because it solves the real bottleneck of modern YouTube:

Consistency + quality + volume… without burning out.

Below is a practical, creator-friendly system you can run with mostly free/low-cost tools, including Google Whisk for bulk visuals, Meta AI for image-to-video animation, and Gemini TTS / AI Studio for voiceover. (You can swap tools as needed—but the pipeline stays the same.)

Why “Leverage” Beats Talent on YouTube Now

Most creators fail for one reason:

- They can make one good video…
- But they can’t repeat it every week without their life falling apart.

YouTube doesn’t just reward quality. It rewards consistent output.

So the new play is simple:

- Build a repeatable content format (same vibe, same structure)
- Automate the production steps that don’t require “human magic”
- Reserve your time for the only parts that matter: hook, story, packaging

The 6-Step AI Video Pipeline

Step 1) Generate the script (the “brain” of the video)

Your script isn’t just narration—it becomes your entire production blueprint:

- total duration
- number of scenes
- scene descriptions
- image prompts
- character profiles (for consistency)

Many creators use Gemini for this step because it’s strong at structured outputs and story formats. (You can use any LLM—Gemini, ChatGPT, Claude—the key is the prompt + structure.)

Pro tip: Ask the model to output in a repeatable template:

- Scenes 1–24
- For each scene: narration + visual description + image prompt + camera/motion notes
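If you want to reuse this template across videos, here is a minimal Python sketch that builds the script prompt for you. The field names, the 480-second default, and the 24-scene count are assumptions based on the template above, not an official Gemini format; paste the returned text into whichever LLM you use.

```python
# Hypothetical sketch: a reusable script prompt for any LLM.
# Field names and defaults are assumptions, not an official format.

SCENE_FIELDS = ["Narration", "Visual description", "Image prompt", "Camera/motion notes"]


def seconds_per_scene(total_seconds: int, num_scenes: int) -> float:
    """Average on-screen time each scene gets."""
    return total_seconds / num_scenes


def build_script_prompt(topic: str, total_seconds: int = 480, num_scenes: int = 24) -> str:
    """Return a prompt asking the model to follow a fixed per-scene template."""
    field_lines = "\n".join(f"{name}: ..." for name in SCENE_FIELDS)
    return (
        f"Write a video script about: {topic}\n"
        f"Total duration: {total_seconds} seconds, split into {num_scenes} scenes "
        f"(about {seconds_per_scene(total_seconds, num_scenes):.0f} seconds each).\n"
        "First list character profiles (name, age, clothing, distinctive features).\n"
        f"Then output Scene 1 to Scene {num_scenes}, each using exactly this template:\n"
        "Scene N\n"
        f"{field_lines}\n"
    )


if __name__ == "__main__":
    print(build_script_prompt("a lighthouse keeper who finds a message in a bottle"))
```

Keeping the template in code means every video in your format starts from the identical structure, which is what makes the later extraction and batching steps predictable.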

Step 2) Separate image prompts from the script

You want a clean list of prompts—one per scene—so you can mass-generate visuals.

This takes seconds if you tell your model:

- “Extract only the image prompts from Scene 1–24”
- “Return as a numbered list”
- “Keep character descriptions identical across prompts”
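Extraction can also be done locally without another model call. This minimal sketch assumes your script uses a `Scene N` header line and one `Image prompt:` line per scene (a hypothetical layout; adjust the regular expressions to whatever your template actually produces).

```python
import re

# Assumed layout per scene:
#   Scene 3
#   Narration: ...
#   Image prompt: ...
SCENE_RE = re.compile(r"^Scene[ \t]+(\d+)[ \t]*$", re.MULTILINE)
PROMPT_RE = re.compile(r"^Image prompt:[ \t]*(.+)$", re.MULTILINE)


def extract_image_prompts(script: str) -> list[str]:
    """Return the image prompts in script order, one per scene."""
    prompts = []
    # re.split with a capturing group yields [preamble, num, body, num, body, ...]
    parts = SCENE_RE.split(script)
    for number, body in zip(parts[1::2], parts[2::2]):
        match = PROMPT_RE.search(body)
        if match is None:
            raise ValueError(f"Scene {number} has no 'Image prompt:' line")
        prompts.append(match.group(1).strip())
    return prompts


def as_numbered_list(prompts: list[str]) -> str:
    """Format prompts as '1. ...' lines, ready to paste into a batch tool."""
    return "\n".join(f"{i}. {p}" for i, p in enumerate(prompts, start=1))
```

Raising an error on a missing prompt is deliberate: a silently skipped scene would shift every later image out of sync with its narration.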

Step 3) Bulk-generate all scene images (Google Whisk + Auto-Whisk)

Here’s where the workflow becomes “factory mode.”

Google Whisk is an experimental Google Labs tool that generates new images from reference images you supply for subject, scene, and style, and lets you remix the results quickly.

To scale it, creators use a Chrome extension like Auto-Whisk that batch-sends prompts and automates downloads—so you aren’t generating images one-by-one.

Why it matters:

Bulk generation turns “hours per video” into “minutes per video.”
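Before handing prompts to a batch tool, it helps to split them into fixed-size groups. Auto-Whisk’s exact input format isn’t covered here, so this sketch simply assumes a plain-text list with one prompt per line; the batch size of 8 is an arbitrary placeholder.

```python
def chunk(items: list[str], size: int) -> list[list[str]]:
    """Split items into consecutive groups of at most `size`."""
    if size < 1:
        raise ValueError("size must be at least 1")
    return [items[i:i + size] for i in range(0, len(items), size)]


def batch_texts(prompts: list[str], batch_size: int = 8) -> list[str]:
    """One text blob per batch, one prompt per line (assumed input format)."""
    # Collapse internal newlines and extra spaces so each prompt stays on one line.
    return [
        "\n".join(" ".join(p.split()) for p in batch)
        for batch in chunk(prompts, batch_size)
    ]


if __name__ == "__main__":
    prompts = [f"scene {n}: placeholder prompt" for n in range(1, 25)]
    for n, text in enumerate(batch_texts(prompts), start=1):
        print(f"--- batch {n} ---\n{text}")
```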

Step 4) Lock character consistency (the make-or-break move)

If your characters “shape-shift” every scene, viewers feel the uncanny break—even if the visuals look pretty.

The fix most creators use:

- Generate each main character once
- Use that character image as a consistent “subject” reference inside Whisk-style workflows
- Then regenerate the full storyboard with those locked references

Whisk is built around separate subject, scene, and style inputs, which makes it well suited to remixing while keeping a character’s core look consistent across generations.
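Reference images do the heavy lifting, but you can also keep the text side consistent by pasting the exact same character description into every prompt that mentions that character. A minimal sketch; the character names and descriptions below are hypothetical placeholders.

```python
import re

# Hypothetical locked profiles: write each one once, never edit per scene.
CHARACTERS = {
    "Mara": "Mara, a 30-year-old lighthouse keeper with short grey hair, a yellow raincoat and round glasses",
    "Tomas": "Tomas, a 10-year-old boy with curly black hair and a red knitted scarf",
}


def lock_characters(prompt: str, characters: dict[str, str]) -> str:
    """Append the identical full description of every character named in the prompt."""
    present = [
        desc
        for name, desc in characters.items()
        if re.search(rf"\b{re.escape(name)}\b", prompt)
    ]
    if not present:
        return prompt  # background-only scenes stay untouched
    return prompt + ". Characters: " + "; ".join(present)
```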

Step 5) Turn images into video (free-ish image-to-video)

Now you animate each scene image into motion clips.

Two popular options mentioned in workflows like this:

Option A: Meta AI (image → video)

Meta’s own help docs show that you can generate images and then turn them into videos, including animating uploaded images.

Option B: Grok Imagine

Grok offers a dedicated “Imagine” portal for image and video generation.

Reality check (important): “Free” tools often come with a free tier, daily limits, region restrictions, or account requirements. Build your pipeline assuming limits exist, then batch your work into sessions.
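Because limits vary by tool and account, a quick way to plan is to split your clips into sessions that each fit under the limit you see in your own account. The `daily_limit` value is an assumption you fill in yourself; no tool’s real quota is encoded here.

```python
def plan_sessions(num_clips: int, daily_limit: int) -> list[range]:
    """Split clip numbers 1..num_clips into sessions of at most daily_limit clips."""
    if daily_limit < 1:
        raise ValueError("daily_limit must be at least 1")
    return [
        range(start, min(start + daily_limit, num_clips + 1))
        for start in range(1, num_clips + 1, daily_limit)
    ]


if __name__ == "__main__":
    # Example: 24 scenes with a hypothetical limit of 10 generations per day.
    for day, clips in enumerate(plan_sessions(24, 10), start=1):
        print(f"Session {day}: clips {clips[0]}-{clips[-1]}")
```

With those example numbers it prints three sessions: clips 1-10, 11-20, and 21-24.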

Step 6) Add voiceover (Gemini TTS / AI Studio + optional ElevenLabs)

To turn your story into a real “watchable” video, voice matters.

Gemini TTS (via Gemini API / AI Studio)

Google documents that Gemini can generate single-speaker or multi-speaker speech and points you to “Try in Google AI Studio.”
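For batch narration, the Gemini API can be called from Python with the `google-genai` SDK. The sketch below follows the pattern in Google’s speech-generation documentation at the time of writing; the model name `gemini-2.5-flash-preview-tts`, the `Kore` voice, and the assumption that audio comes back as raw 16-bit, 24 kHz mono PCM may all change, so check the current docs first. The SDK is imported inside the function so the WAV helper runs on its own.

```python
import wave


def pcm_to_wav(pcm: bytes, path_or_file, rate: int = 24000,
               channels: int = 1, sample_width: int = 2) -> None:
    """Wrap raw 16-bit PCM in a WAV container so editors can import it."""
    with wave.open(path_or_file, "wb") as wf:
        wf.setnchannels(channels)
        wf.setsampwidth(sample_width)
        wf.setframerate(rate)
        wf.writeframes(pcm)


def narrate_scene(text: str, voice: str = "Kore",
                  model: str = "gemini-2.5-flash-preview-tts") -> bytes:
    """Request speech for one scene's narration; returns raw PCM bytes."""
    # Imported lazily: only this function needs the SDK (pip install google-genai).
    from google import genai
    from google.genai import types

    client = genai.Client()  # reads the API key from the environment, e.g. GEMINI_API_KEY
    response = client.models.generate_content(
        model=model,
        contents=text,
        config=types.GenerateContentConfig(
            response_modalities=["AUDIO"],
            speech_config=types.SpeechConfig(
                voice_config=types.VoiceConfig(
                    prebuilt_voice_config=types.PrebuiltVoiceConfig(voice_name=voice)
                )
            ),
        ),
    )
    return response.candidates[0].content.parts[0].inline_data.data
```

Usage (requires an API key): `pcm_to_wav(narrate_scene("Night falls over the lighthouse."), "scene_01.wav")`. One file per scene makes the later sync step easier than one long track.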

Optional: ElevenLabs

ElevenLabs offers a free tier, but details vary by plan and may include constraints (like character limits and usage terms). Verify inside your account before monetizing content.

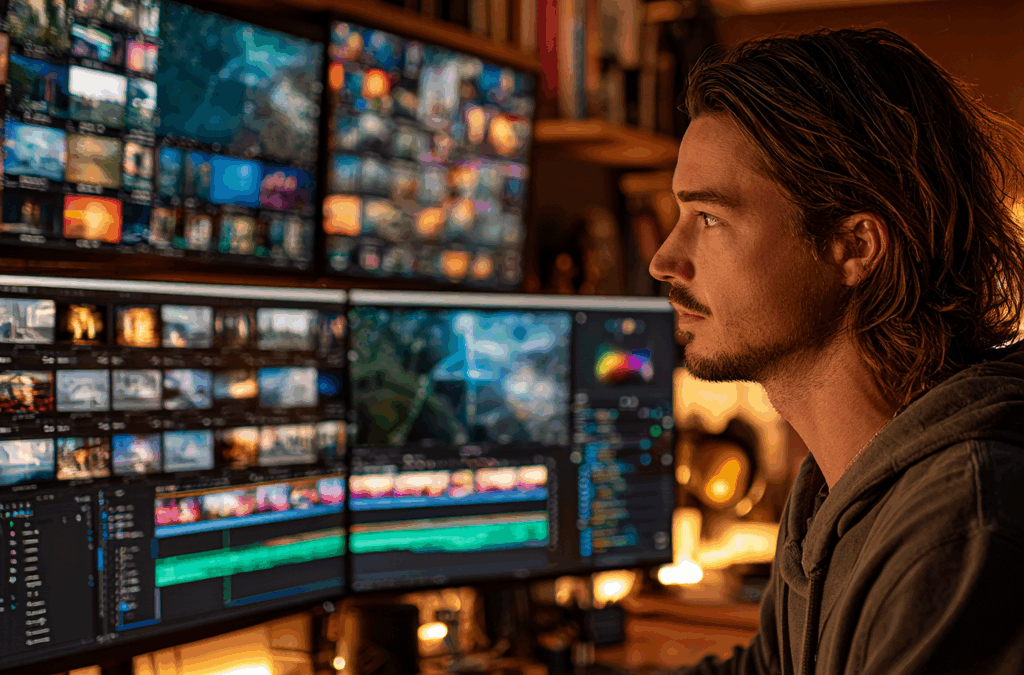

Editing: Where Your Video Becomes “Real”

Once you have:

- 24 short video clips (animated scenes)
- a voiceover track (or voiceover per scene)

Bring it into your editor and do three things:

- Sync clips to narration (adjust clip speed to match)
- Add subtle transitions
- Add light background music (keep it low under voice)

Many creators use CapCut because it’s fast and friendly for this style of editing, and CapCut publishes guides on quality/export/upscaling workflows.
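The speed adjustment is simple arithmetic: divide the clip’s length by the narration’s length. A minimal sketch; the 0.5 minimum speed below is an arbitrary threshold (not a CapCut setting) for flagging scenes that would look unnaturally slow and probably need a second clip instead.

```python
MIN_NATURAL_SPEED = 0.5  # hypothetical threshold; tune by eye


def speed_factor(clip_seconds: float, narration_seconds: float) -> float:
    """Speed multiplier so the clip lasts exactly as long as its narration.

    Values below 1.0 slow the clip down; values above 1.0 speed it up.
    """
    if clip_seconds <= 0 or narration_seconds <= 0:
        raise ValueError("durations must be positive")
    return clip_seconds / narration_seconds


def needs_second_clip(clip_seconds: float, narration_seconds: float) -> bool:
    """True if stretching one clip would drop below the minimum natural speed."""
    return speed_factor(clip_seconds, narration_seconds) < MIN_NATURAL_SPEED
```

For example, a 5-second clip under 6.5 seconds of narration needs a speed of about 0.77x.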

The 2026 Advantage: YouTube Rewards “Smart Volume”

This is the meta-lesson:

- A great creator who posts once a month loses
- A solid creator with a repeatable pipeline wins

Your goal isn’t “perfect art.”

Your goal is:

- consistent packaging (titles/thumbnails)
- consistent storytelling structure
- consistent output

That’s how small channels become big channels fast.

A Simple System You Can Copy (Without Getting Overwhelmed)

Start with one format. Pick one:

- “short cinematic stories”
- “mini-documentaries”
- “bedtime calm fiction”
- “AI history tales”
- “motivational narrative shorts”

Then build your reusable assets:

- 2 main character profiles
- 8–12 background “scene types”
- 1 script prompt template
- 1 export template (1080p, consistent pacing)

Then do production in batches:

- Day 1: write 4 scripts
- Day 2: generate all scene images
- Day 3: animate scenes into clips
- Day 4: voiceover + edit + schedule

Batching is how this becomes scalable.
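To see what a batch actually involves, multiply it out: four scripts at 24 scenes each means 96 images on Day 2 and 96 clips on Day 3. A tiny sketch that prints the plan; the defaults simply mirror the numbers used in this article.

```python
def batch_plan(scripts: int = 4, scenes_per_script: int = 24) -> dict[str, str]:
    """Four-day batch schedule with each day's workload spelled out."""
    scenes = scripts * scenes_per_script
    return {
        "Day 1": f"write {scripts} scripts",
        "Day 2": f"generate {scenes} scene images",
        "Day 3": f"animate {scenes} scenes into clips",
        "Day 4": f"voiceover + edit + schedule {scripts} videos",
    }


if __name__ == "__main__":
    for day, task in batch_plan().items():
        print(f"{day}: {task}")
```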
